Command: device, vr, xr

Usage:

device device-type [ status ] device-options

The device command sets modes for certain external devices, where the device-type can be: snav, webcam, vr, xr, realsense, leapmotion, or lookingglass.

See also: making movies, Augmented reality videos with ChimeraX, Mixed reality video recording in ChimeraX

The status of a mode can be on (synonyms true, True, 1) or off (synonyms false, False, 0). Device-specific options are described below.

•

device snav [ status ] [ fly true | false ] [ speed factor ]

The command device snav enables manipulation with a SpaceNavigator® 3D mouse from 3Dconnexion.

The fly option indicates whether the force applied to the device should be interpreted as acting on the camera (true), where pushing forward zooms in because it moves the camera viewpoint toward the scene, or as acting on the models in the scene (false, default), where pushing forward zooms out because it pushes the models away. In either case, however, it is the camera that actually moves.

The speed option sets a sensitivity factor for motion relative to the device (default 1.0). Decreasing the value (for example, to 0.1) reduces sensitivity to give slower motion, whereas increasing the value has the opposite effect.
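The sign convention of the fly option and the scaling by speed can be summarized in a small Python model. This is a sketch, not ChimeraX code: camera_step is a hypothetical function, and it assumes the device reading is reduced to a single forward-push value that is simply multiplied by the speed factor.

```python
def camera_step(push: float, fly: bool = False, speed: float = 1.0) -> float:
    """Camera displacement toward the scene for one device reading.

    Hypothetical model: push > 0 means the cap is pushed forward, and a
    positive result moves the camera toward the scene (zoom in).
    """
    step = speed * push
    if fly:
        # Force acts on the camera: pushing forward moves the camera in.
        return step
    # Force acts on the models: pushing forward pushes the models away,
    # which is realized by moving the camera backward.
    return -step
```

For example, camera_step(1.0, fly=True) gives 1.0 (zoom in), camera_step(1.0) gives -1.0 (zoom out), and camera_step(1.0, speed=0.1) gives a ten times smaller backward step.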

See also: mousemode

•

webcam [ status ] [ foregroundColor color-spec ] [ saturation N ] [ colorPopup true | false ] [ flipHorizontal true | false ] [ framerate fps ] [ size xpixels,ypixels ] [ name camera-name ]

The webcam command (same as device webcam) shows webcam video as a flat background behind the ChimeraX scene, except that items similar to the specified foreground color (default lime green) will appear in front. This allows pointing at features in the ChimeraX scene with a real object.

The saturation option sets the stringency of color-matching (default 5, details below) and the colorPopup option (default false) sets whether pausing the cursor over the background shows a popup balloon reporting the color of the underlying pixel as R,G,B color components on the 0-100 scale.

The flipHorizontal option (default true) shows the left-right mirror image of the webcam video so that pointing rightward while facing the webcam and screen is shown as pointing rightward in the video.

If size is not specified, the video resolution is whatever the webcam can provide given the target framerate value (default 25 frames per second), or if that rate is not possible, the closest framerate supported by the camera. If size is specified, the closest size supported by the camera is provided, and matching the framerate is given second priority.
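The size and framerate priorities can be sketched as a selection over the capture modes a camera offers. This is an illustration under stated assumptions, not the ChimeraX implementation: choose_mode is hypothetical, "closest size" is assumed to mean the smallest summed difference in width and height, and when no size is requested the largest resolution at the closest framerate is assumed.

```python
from typing import List, Optional, Tuple

Mode = Tuple[int, int, float]  # (width, height, frames per second)


def choose_mode(modes: List[Mode], framerate: float = 25.0,
                size: Optional[Tuple[int, int]] = None) -> Mode:
    """Pick a capture mode following the webcam size/framerate priorities."""

    def fps_error(m: Mode) -> float:
        return abs(m[2] - framerate)

    if size is None:
        # Framerate first; among equally close rates prefer more pixels.
        return min(modes, key=lambda m: (fps_error(m), -m[0] * m[1]))
    w, h = size
    # Size first; framerate only breaks ties between equally close sizes.
    return min(modes, key=lambda m: (abs(m[0] - w) + abs(m[1] - h), fps_error(m)))
```

With modes such as 1920x1080 at 15 fps and 1280x720 at 30 fps, the default target of 25 fps selects the 30 fps mode, whereas requesting size 1920,1080 selects the 15 fps mode because size takes priority.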

The name option allows using a specific webcam when more than one is available. The webcam command reports in the Log the names of the available webcams and which one it is using. If it is not using the desired one, close the existing webcam model and reissue the webcam command with the name option, enclosing the full camera-name in quotation marks, for example:

webcam name "FaceTime HD Camera (Built-in)"

When the command is issued, any unspecified options are set to their defaults. The exception is framerate and size: to change them after a previous webcam command, it is necessary to use webcam off first and then webcam on with the new values.

Note that other applications in use at the same time as ChimeraX may change the webcam resolution or framerate unexpectedly.

Not all webcams will work with this command, only those that use certain RGB and YUV pixel formats. If an unsupported pixel format is used, an error message will appear and the video will not be shown in the ChimeraX background.

Color-matching details: only the relative intensities of the R,G,B components of the foreground color are important. Pixels in the webcam view are considered as matching if the intensity order of the R,G,B components matches that of the foreground color (for example, G>B>R) and these components are separated by at least the saturation value on the 0-100 scale. With the default parameters, for example (foreground color lime green, which has G>B=R, and saturation 5), any pixels with the green component at least 5 greater than both the red and blue components (on the 0-100 scale) will be shown in front of the ChimeraX scene.
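The matching rule can be written out as a per-pixel test. This Python sketch is not ChimeraX code; matches_foreground is a hypothetical function, and it assumes that components that are equal in the foreground color (such as B=R for lime green) impose no constraint, while every strictly ordered pair must be ordered the same way in the pixel and differ by at least the saturation value.

```python
from typing import Sequence


def matches_foreground(pixel: Sequence[float], foreground: Sequence[float],
                       saturation: float = 5.0) -> bool:
    """True if pixel's R,G,B ordering matches the foreground color's.

    Both colors are (r, g, b) on a 0-100 scale. Assumption: equal
    foreground components impose no constraint on the pixel.
    """
    for i in range(3):
        for j in range(3):
            if foreground[i] > foreground[j]:
                # This ordered pair must hold in the pixel by >= saturation.
                if pixel[i] - pixel[j] < saturation:
                    return False
    return True
```

With a foreground such as (20, 80, 20), which has G>B=R like the default lime green, the pixel (10, 30, 20) matches because green exceeds both red and blue by at least 5, whereas (10, 14, 10) does not.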

•

( vr | xr ) [ status ] [ mirror true | false ] [ passthrough true | false ] [ center true | false ] [ clickRange r ] [ gui tool1[,tool2 ... ]] [ simplifyGraphics true | false ] [ multishadowAllowed true | false ] [ nearClipDistance dn ] [ farClipDistance df ] [ roomPosition matrix | report ]

The vr or xr command (same as device vr or device xr) enables a virtual reality mode for systems supported by SteamVR or OpenXR, including Quest 3, Quest 2, Valve Index, Oculus Rift S, HTC Vive, and others. SteamVR must be installed separately in order to use the vr command, and with Meta Quest headsets the Meta Quest Link app must be installed and started. The xr command allows using Meta Quest headsets without SteamVR installed. For details and related issues, see ChimeraX virtual reality.

See also: vr button, vr roomCamera, meeting, buttonpanel, camera, view, making movies, ChimeraX videos including how coronaviruses get into cells

VR status, model positions in the room, and hand-controller button assignments are saved in ChimeraX session files. The VR model positions and button assignments are also retained through uses of vr on and vr off, but not after exiting ChimeraX.

The mirror option indicates whether to show the ChimeraX scene in the desktop graphics window. If true (default), the VR headset right-eye view is shown, with graphics waitForVsync automatically set to false so that VR rendering will not slow down to the desktop rendering rate. If false, no graphics are shown in the desktop display, allowing all graphics computing resources to be dedicated to VR headset rendering. Updating the graphics window can cause flicker in the VR headset because refreshing the computer display (nominally 60 times per second) slows rendering to the headset. ChimeraX turns off syncing to vertical refresh if possible. Another way to mirror is to use the SteamVR menu entry to display a mirror window.

The passthrough option controls whether the physical room is visible in the VR headset using passthrough video provided by cameras on the headset. This option is only available with the xr command, and only with Meta Quest 3, Quest 2, and Quest Pro headsets. It uses the Meta Quest OpenXR passthrough extension. Currently (April 2024) Meta requires enabling options in the Meta Quest Link PC app: Settings / Beta / Developer Runtime Features and Settings / Beta / Passthrough over Meta Quest Link, and these require a Meta developer account.

The center option (default true) centers and scales models in the room.

The clickRange option sets the depth range for picking objects with the hand-controller cones, where r is the maximum distance from the tip of the cone to the object, in units of meters in the room (default 0.10). Limiting the range prevents accidentally picking far-away objects.
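The effect of clickRange can be illustrated by filtering candidate picks by their distance from the cone tip. This is a sketch, not ChimeraX code: pick_within_range and the hits dictionary are hypothetical, and it assumes the pick ray has already produced an intersection point (in room meters) for each object.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float, float]


def pick_within_range(cone_tip: Point, hits: Dict[str, Point],
                      click_range: float = 0.10) -> Optional[str]:
    """Return the nearest hit object within click_range meters of the tip.

    hits maps hypothetical object names to room-coordinate points where
    the pick ray meets each object; farther objects are ignored.
    """
    best_name, best_dist = None, click_range
    for name, point in hits.items():
        d = math.dist(cone_tip, point)
        if d <= best_dist:
            best_name, best_dist = name, d
    return best_name
```

An object 5 cm from the tip is picked, while one 2 m away is ignored unless the range is raised above 2.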

The gui option specifies which ChimeraX tool panels to show in VR when the hand-controller button assigned to that function (by default, Vive menu or Oculus B/Y) is pressed. Any combination of tools can be specified as a comma-separated list of one or more tool names, as listed in the Tools menu and shown for most tools in their title bars. Tools may also be custom panels created with the buttonpanel command. If the gui option is not given, the same tools as currently shown in the desktop display (including the Toolbar, and on Windows only, the ChimeraX main menu) will be shown.

The simplifyGraphics option (default true) reduces the maximum level of detail in VR by limiting the total atom and bond triangles to one million each. This helps to maintain full rendering speed. The previous total-triangle limits are restored when the VR mode is turned off. Normally, the maximum atom and bond triangles are set to five million each.

See also: graphics

Ambient shadowing or “ambient occlusion” requires calculating shadows from multiple directions. This may make rendering too slow for VR and cause stuttering in the headset, especially if the number of directions (the lighting multiShadow parameter) is high. By default (multishadowAllowed false), when the number of directions is greater than 0, enabling VR switches to the simple lighting preset, which does not use ambient shadowing. With multishadowAllowed true, enabling VR does not automatically change the lighting. In VR, the lighting presets with ambient shadowing (full, soft, and gentle) use 8 directions instead of 64.
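The lighting rules above amount to a small decision procedure. This Python sketch models them and is not ChimeraX's implementation; lighting_when_vr_enabled is hypothetical, and it assumes that the simple preset uses 0 shadow directions and that the 8-direction reduction applies whenever an ambient preset stays in effect.

```python
from typing import Tuple

AMBIENT_PRESETS = {"full", "soft", "gentle"}  # presets with ambient shadowing


def lighting_when_vr_enabled(preset: str, multishadow: int,
                             multishadow_allowed: bool = False) -> Tuple[str, int]:
    """Return (preset, number of shadow directions) after turning VR on."""
    if multishadow > 0 and not multishadow_allowed:
        # Ambient shadowing may be too slow for VR: fall back to simple.
        return "simple", 0
    if preset in AMBIENT_PRESETS and multishadow > 0:
        # Ambient presets render with 8 directions in VR instead of 64.
        return preset, 8
    return preset, multishadow
```

For example, the full preset (64 directions) becomes simple lighting by default, but stays full with 8 directions when multishadowAllowed is true.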

The nearClipDistance and farClipDistance options set clipping-plane positions in meters from the viewer (defaults dn = 0.1 and df = 500). The difference from using the clip command is that these options keep the planes at the specified distances from the viewer as the viewpoint changes. If surfaces are clipped, for speed-of-interaction purposes it may be useful to hide the planar caps (command surface cap false).
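Because the planes are measured from the viewer, whether a point is clipped depends on its depth along the current view direction, which changes as the headset moves. This Python sketch illustrates that idea only; visible_depth is a hypothetical function, and it assumes a unit view direction with planes perpendicular to it.

```python
from typing import Sequence


def visible_depth(point_room: Sequence[float], eye_room: Sequence[float],
                  view_dir: Sequence[float], near: float = 0.1,
                  far: float = 500.0) -> bool:
    """True if a room-coordinate point lies between the VR clip planes.

    Depth is measured in meters from the eye along the unit view
    direction, so the planes move together with the viewer.
    """
    depth = sum((p - e) * d for p, e, d in zip(point_room, eye_room, view_dir))
    return near <= depth <= far
```

A point 2 m in front of the viewer is visible, but if the viewer walks to within 2 cm of it, the point falls in front of the 0.1 m near plane and is clipped, even though neither plane was moved with the clip command.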

When the mode is enabled, the roomPosition option can be used to specify the transformation between room coordinates (in meters, with origin at room center) and scene coordinates (typically in Å) or to simply report the current transformation in the Log. The transformation matrix is given as 12 numbers separated by commas only, corresponding to a 3x3 matrix for rotation and scaling, with a translation vector in the fourth column. Ordering is row-by-row, such that the translation vector is given as the fourth, eighth, and twelfth numbers. Example:

vr room 20,0,0,0,0,20,0,0,0,0,20,0
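The 12-number layout can be checked with a short Python sketch that parses the string row by row and applies it to a point. The function names are hypothetical, and the sketch assumes the matrix maps room coordinates to scene coordinates; the text above specifies the number ordering but not which direction the stored transformation applies in.

```python
from typing import List, Sequence, Tuple


def parse_room_position(text: str) -> List[List[float]]:
    """Parse 12 comma-separated numbers into a 3x4 matrix, row by row."""
    values = [float(v) for v in text.split(",")]
    if len(values) != 12:
        raise ValueError("roomPosition needs exactly 12 numbers")
    return [values[0:4], values[4:8], values[8:12]]


def room_to_scene(m: Sequence[Sequence[float]],
                  p: Sequence[float]) -> Tuple[float, ...]:
    """Apply rotation/scale (columns 1-3) plus translation (column 4)."""
    return tuple(row[0] * p[0] + row[1] * p[1] + row[2] * p[2] + row[3]
                 for row in m)
```

Under that assumption, the example above scales by 20 with no translation, so a point 1 m from the room center along x corresponds to 20 Å along scene x.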

The frequency of label reorientation is automatically decreased when VR is enabled and restored when VR is turned off.

•

( vr button | xr button ) button-name [ function ] [ command "string" ] [ hand left | right ]

|

|

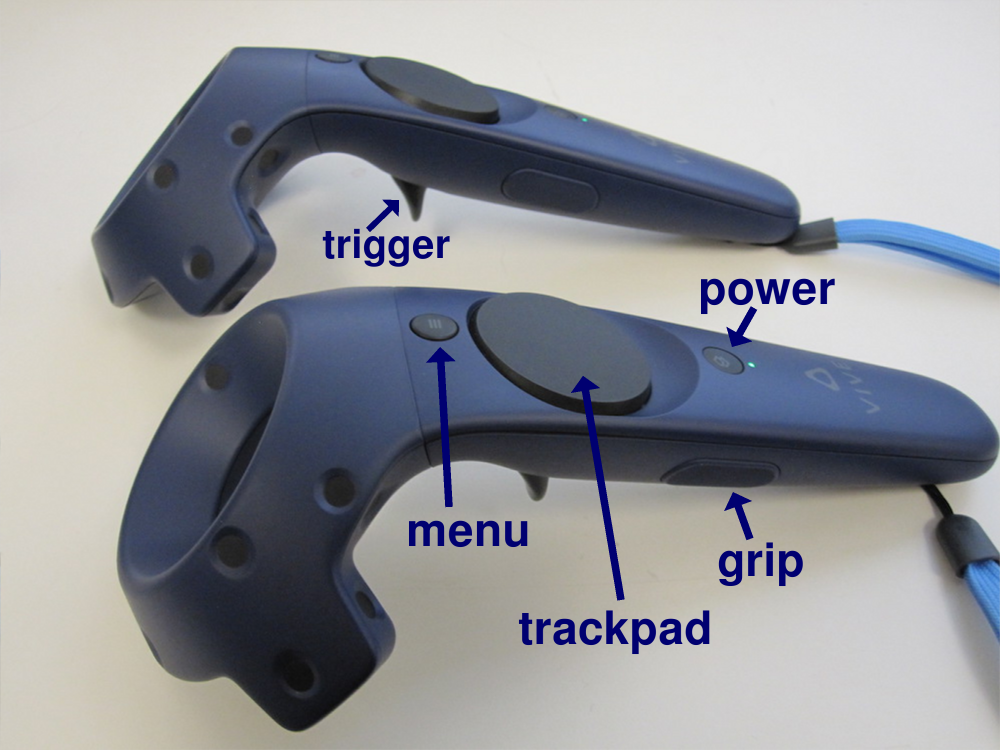

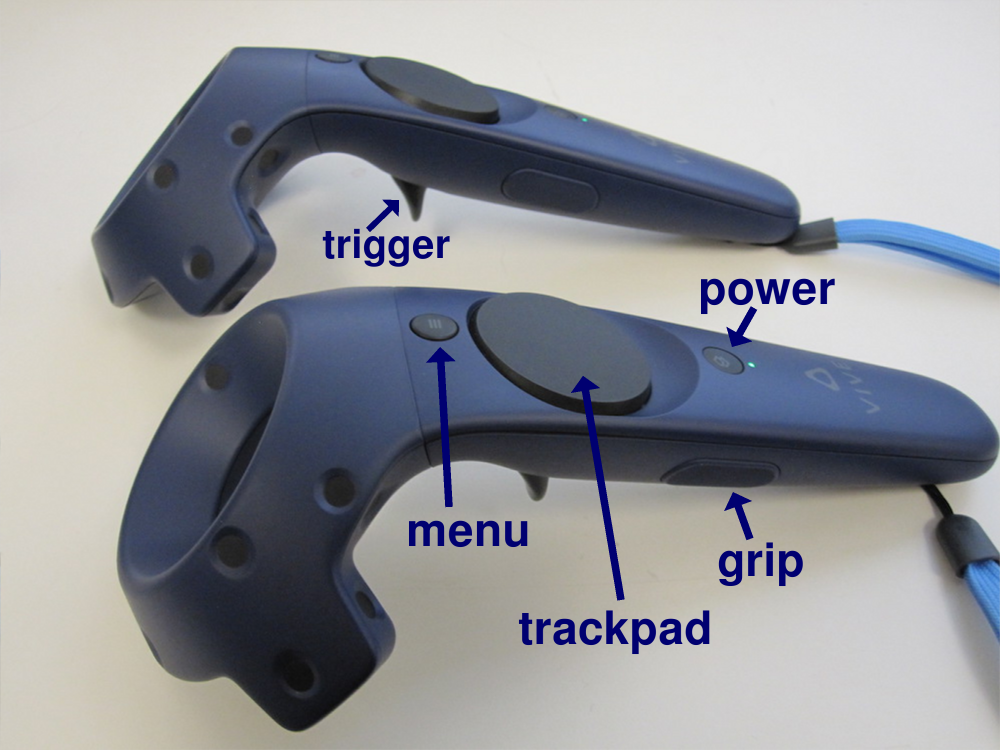

Vive hand controllers

|

|

| Oculus “Touch” hand controllers

|

The vr button or xr button command assigns modes (functions) to the hand-controller buttons in virtual reality, as a scriptable alternative to clicking icons to assign modes interactively. The available function names are those listed for mousemode, plus the following VR-specific modes:

- button lock – toggle (inactivate/activate) all other hand-controller buttons
- recenter – center and scale models in the room
- show ui – collectively show, hide, move ChimeraX tool panels

Alternatively, with the command option, command(s) to execute upon button click can be given as a string enclosed in quotation marks. The string may contain multiple commands with semicolon separators. The available function names can be listed in the Log with the command usage vr button, and should be enclosed in quotation marks if they contain spaces. The function can also be given as the word default to reset to default functions. If no function or command assignment is given, the current function is reported in the Log.

The hand option can be given to assign a function to the specified button of only one hand controller; otherwise, those on both controllers will be assigned.

The button-name can be:

- trigger
- grip
- touchpad (synonym thumbstick) – Vive trackpad or Oculus thumbstick
- menu – Vive menu button
- A – a button on the Oculus right-hand controller (hand option ignored)
- B – a button on the Oculus right-hand controller (hand option ignored; same as menu with hand right)
- X – a button on the Oculus left-hand controller (hand option ignored)
- Y – a button on the Oculus left-hand controller (hand option ignored; same as menu with hand left)
- all – all hand-controller buttons, primarily for use in the command:

vr button all default
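How a button-name and the hand option combine can be sketched as a lookup. This is a hypothetical Python helper modeled on the list above, not ChimeraX code; the all name is left out for brevity, and letter buttons B and Y are represented as the menu button of their fixed hand.

```python
from typing import List, Optional, Tuple

# Oculus letter buttons are fixed to one controller; hand is ignored.
LETTER_BUTTONS = {"A": "right", "B": "right", "X": "left", "Y": "left"}
SYNONYMS = {"thumbstick": "touchpad"}
GENERIC_BUTTONS = {"trigger", "grip", "touchpad", "menu"}


def resolve_button(name: str, hand: Optional[str] = None) -> List[Tuple[str, str]]:
    """Return the (hand, button) pairs a vr button assignment would affect."""
    name = SYNONYMS.get(name, name)
    if name in LETTER_BUTTONS:
        side = LETTER_BUTTONS[name]
        # B and Y act as the menu button of the right and left hand.
        button = "menu" if name in ("B", "Y") else name
        return [(side, button)]
    if name not in GENERIC_BUTTONS:
        raise ValueError(f"unsupported button name: {name}")
    sides = [hand] if hand in ("left", "right") else ["left", "right"]
    return [(side, name) for side in sides]
```

For example, resolve_button("thumbstick") affects the touchpad of both controllers, while resolve_button("B", hand="left") still affects only the right controller's menu button.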

Initial defaults are to translate and rotate with triggers, zoom with Vive touchpad or Oculus A button, recenter with Vive grip or Oculus X button, and show ui with the Vive menu or Oculus B/Y button (more...).

The only function settings that work by tilting the Oculus thumbstick are rotate, zoom, contour level, play map series, and play coordinates.

•

( vr roomCamera | xr roomCamera ) [ status ] [ fieldOfView angle ] [ width w ] [ backgroundColor color-spec ] [ savePosition true | false ] [ showHands true | false | toggle ] [ showPanels true | false | toggle ] [ tracker true | false ] [ saveTrackerMount true | false ]

The vr roomCamera or xr roomCamera command sets up a separate camera view fixed in the VR room coordinates, useful for making video tutorials. The camera view is shown in the desktop graphics window and as “picture in picture” in the VR headset.

See also: camera, making movies

The vr roomCamera command can only be used in virtual reality after using vr on, and the xr roomCamera command can only be used after using xr on.

In VR, the room camera is shown as a rectangle at the camera position (initially with its center 1.5 meters above the floor and offset 2 meters horizontally from the room center), facing the user and showing what the camera sees. The width of the rectangle is given in meters (default 1.0), and the height is chosen to match the aspect ratio of the desktop graphics window. The default fieldOfView for the room camera is 90°.

The background color of the room camera can be set separately from the VR background; the default is a dark gray (10,10,10) so that the rectangle is visible against a black scene background.

The room camera rectangle is a model that can be selected and then moved in VR with the hand-controller mode assigned by clicking either of the corresponding icons (or with the vr button command).

Specifying savePosition true saves the current position and orientation of the room camera in the preferences. The showHands and showPanels options control whether the hand-controller cones and VR gui panels are shown in the room camera view, respectively (default true, whereas toggle reverses the current setting). These options do not affect what is shown in the headset.

Two options allow using a Vive Tracker device to control the room camera position:

- Using tracker true synchronizes the room camera to the position and orientation of the device, with offsets (if any) previously saved with saveTrackerMount true.
- Using saveTrackerMount true saves in the preferences the current room camera translation and rotation relative to the device.

Thus, the room camera can be positioned by hand as described above, and then its position and orientation offsets from the device can be saved with saveTrackerMount true for use whenever tracker true is in effect.
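Saving a mount offset and later following the tracker is a composition of rigid transforms. The Python sketch below shows the usual way to do this and is not necessarily how ChimeraX stores the offset: all function names are hypothetical, and it assumes the saved mount is the camera pose expressed in the tracker's own frame.

```python
from typing import List, Tuple

Mat3 = List[List[float]]
Vec3 = List[float]
Pose = Tuple[Mat3, Vec3]  # rotation matrix and translation (room meters)


def compose(a: Pose, b: Pose) -> Pose:
    """Return the pose a * b (apply b first, then a)."""
    ra, ta = a
    rb, tb = b
    r = [[sum(ra[i][k] * rb[k][j] for k in range(3)) for j in range(3)]
         for i in range(3)]
    t = [sum(ra[i][k] * tb[k] for k in range(3)) + ta[i] for i in range(3)]
    return r, t


def invert(p: Pose) -> Pose:
    """Invert a rigid pose: transpose the rotation, rotate and negate the shift."""
    r, t = p
    rt = [[r[j][i] for j in range(3)] for i in range(3)]
    return rt, [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]


def save_tracker_mount(tracker: Pose, camera: Pose) -> Pose:
    """Camera pose relative to the tracker (the offset to remember)."""
    return compose(invert(tracker), camera)


def camera_from_tracker(tracker: Pose, mount: Pose) -> Pose:
    """Room camera pose that follows the tracker with the saved offset."""
    return compose(tracker, mount)
```

If the camera is placed 0.5 m above the tracker and the mount is saved, moving the tracker 2 m sideways moves the computed camera pose by the same 2 m while keeping the 0.5 m offset.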

•

realsense [ status ] [ size x,y ] [ dsize dx,dy ] [ framesPerSecond fps ] [ align true | false ] [ denoise true | false ] [ denoiseWeight weight ] [ denoiseColorTolerance tolerance ] [ projector true | false ] [ angstromsPerMeter apm ] [ skipFrames N ] [ setWindowSize true | false ]

The command realsense (same as device realsense) enables blending video from an Intel RealSense depth-sensing camera with ChimeraX graphics to make augmented reality videos. If VR is enabled, this command automatically starts a virtual-reality room camera which renders the models to be blended with the RealSense camera image. It also sets the graphics window size to match the RealSense camera image size. This command is only available after installation of the RealSense bundle from the ChimeraX Toolshed (menu: Tools... More Tools...).

The size and dsize options set camera image resolution for color and depth, respectively. Each takes a pair of comma-separated values indicating the pixel dimensions in X and Y (defaults: size 960,540 and dsize 1280,720). The framesPerSecond option gives the video capture rate (default 30). The align option indicates whether to compensate for the offset between the color and depth cameras in the device (default true, which slows rendering).

The denoise option (default true) specifies whether to reduce depth noise by averaging depth over time at pixels whose colors remain fairly constant, using parameters denoiseWeight (default 0.1) and denoiseColorTolerance (default 10). Denoising details are given below.

The projector option enables spraying the room with IR dots from a projector on the camera device for better depth detection (default false, as the IR beams interfere with other devices that use IR such as Vive VR tracking). Normally the camera device is used at the same time as VR, which in turn sets the scale factor of the scene relative to the room. However, if VR is not in use, the angstromsPerMeter option can be used to specify the relative scale (default 50).

The skipFrames option indicates how many ChimeraX graphics update frames to skip before getting a new camera frame (default 2, meaning to get a new camera frame at every 3rd ChimeraX graphics frame). The setWindowSize option causes the graphics window size to be changed to match the camera color image resolution (default true).
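The skipFrames arithmetic can be made concrete with two small Python helpers. Both are hypothetical illustrations, and the first assumes a camera frame is fetched on graphics frame 0.

```python
def camera_frame_indices(num_graphics_frames: int, skip_frames: int = 2) -> list:
    """Graphics frame numbers (0-based) at which a new camera frame is fetched.

    Assumes the first fetch is on frame 0; with skip_frames = N, fetches
    repeat every N + 1 graphics frames.
    """
    return list(range(0, num_graphics_frames, skip_frames + 1))


def camera_update_rate(graphics_fps: float, skip_frames: int = 2) -> float:
    """Rate of new camera images for a given graphics frame rate."""
    return graphics_fps / (skip_frames + 1)
```

For example, if the graphics were updating 90 times per second, the default skipFrames 2 would request a new camera image 30 times per second, the same as the default framesPerSecond.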

Denoising details:

The depth values from RealSense cameras fluctuate rapidly over time by small amounts, and the depth value for some pixels is unknown. This depth noise causes flickering in the blended video where depths are fluctuating or unknown, especially at boundaries between video objects and computer-generated objects.

The denoise true option reduces the depth noise by averaging depth over time at pixels when their colors remain fairly constant. When the color of a pixel changes by more than 10 (the default denoiseColorTolerance, on a 0-255 scale), its depth is always updated immediately to reduce motion blur. The averaging blends the current depth value with the previously used depth, giving the current value a weight of 0.1 (the default denoiseWeight), so it roughly averages depth over the previous 10 frames.

Pixels with unknown depth occur for two reasons. In some areas of the video frame, such as blank white walls, the camera is unable to judge depth because it relies on matching features in the two stereo IR cameras, and if there are no discernible features to match, the depth is reported as 0. This problem can be reduced by using projector true, which projects a dense array of invisible infrared laser dots in the room to add texture (but can interfere with tracking by VR headsets that also use infrared). The second cause of missing depth values is that only one stereo camera sees a part of the room because the other stereo camera is blocked by an object. This happens at boundaries of foreground objects in the room.

To minimize both of these effects (but especially the second), the denoise option keeps track of the maximum depth seen at each pixel and its color, representing the room background. If a depth value is not available for a pixel for a frame, it will use the maximum depth value, provided that the color of the pixel is close to the color of the background. This background depth fill does not help for pixels for which the camera has never reported a depth value. The denoising algorithm is based on the assumption that the camera is not moving.
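The per-pixel logic described above can be sketched in Python for a single pixel. This is a model of the description, not the RealSense bundle's code, which presumably works on whole image arrays: PixelDenoiser and colors_close are hypothetical, "close" colors are assumed to differ by at most the tolerance in every channel, and a pixel with missing depth that does not look like background is assumed to stay unknown (0).

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Color = Tuple[int, int, int]  # R, G, B on a 0-255 scale


def colors_close(a: Color, b: Color, tolerance: float) -> bool:
    """Assumption: close if no channel differs by more than tolerance."""
    return all(abs(x - y) <= tolerance for x, y in zip(a, b))


@dataclass
class PixelDenoiser:
    weight: float = 0.1               # like denoiseWeight
    tolerance: float = 10.0           # like denoiseColorTolerance
    depth: float = 0.0                # depth used last frame (0 = unknown)
    color: Optional[Color] = None     # color seen last frame
    bg_depth: float = 0.0             # maximum depth seen: room background
    bg_color: Optional[Color] = None  # color recorded with that depth

    def update(self, raw_depth: float, color: Color) -> float:
        """Take one frame's depth and color; return the depth to use."""
        if raw_depth > 0:
            if raw_depth > self.bg_depth:
                # Remember the farthest depth and its color as background.
                self.bg_depth, self.bg_color = raw_depth, color
            steady = (self.depth > 0 and self.color is not None
                      and colors_close(color, self.color, self.tolerance))
            if steady:
                # Exponential moving average, about 1/weight frames of memory.
                self.depth = self.weight * raw_depth + (1 - self.weight) * self.depth
            else:
                # Color changed (or no history): use the new depth at once.
                self.depth = raw_depth
        elif self.bg_color is not None and colors_close(color, self.bg_color, self.tolerance):
            # Depth missing, but the pixel looks like background: fill it in.
            self.depth = self.bg_depth
        else:
            # Assumption: otherwise the depth stays unknown this frame.
            self.depth = 0.0
        self.color = color
        return self.depth
```

The exponential moving average with weight 0.1 is what makes the result roughly a 10-frame average, and resetting on a color change keeps moving objects from smearing.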

•

leapmotion [ status ] [ headMounted true | false ] [ pointerSize size ] [ pinchThresholds pinch,unpinch ] [ modeLeft mouse | vr ] [ modeRight mouse | vr ] [ width width ] [ maxDelay delay ] [ center x,y,z ] [ facing x,y,z ] [ cord x,y,z ] [ debug true | false ]

The command leapmotion (same as device leapmotion) enables using a Leap Motion hand-tracking device with ChimeraX on Windows (details...). The command is only available after installation of the Windows LeapMotion bundle from the ChimeraX Toolshed (menu: Tools... More Tools...).

The Leap Motion device contains cameras that track hand and finger positions. It can be placed on the desktop below the hands, or attached to a headset facing the screen (specified with headMounted true). The head-mounted position works best. When in view of the device, each hand is represented in the graphics window by a pointer displayed as 3D crosshairs, yellow crosshairs for the left and green for the right. The pointerSize option sets pointer diameter (the length of each crosshair), with default 1.0 Å. In ChimeraX, the pointers are models named left hand and right hand under a top-level model named Leap Motion.

Pinching the thumb to the index finger is equivalent to a button click. The left hand acts as the left mouse button and the right hand as the right mouse button (see mousemode; initial default assignments are rotate and translate, respectively). For button assignments that involve clicking specific objects, picking is along the line of sight from the pointer position.

The modeLeft and modeRight options set the mode of cursor tracking for each hand: mouse (in the 2D plane of the screen) or vr (default, in 3D including hand orientation). The pinchThresholds are pinch detection sensitivities in the range 0-1.0, with defaults 0.9 for pinching and 0.6 for unpinching. The width option gives the hand-motion range in millimeters (default 250) corresponding to the full width of the graphics window. The maxDelay value (default 0.2 seconds) prevents using outdated hand positions when ChimeraX is responding slowly.
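Two separate pinchThresholds give hysteresis: a pinch starts only above the higher value and ends only below the lower one, so a pinch strength hovering near one threshold does not produce rapid press/release chatter. This Python sketch is illustrative only; PinchDetector is hypothetical, and it assumes the tracker reports a pinch strength from 0 (open) to 1 (fully pinched).

```python
class PinchDetector:
    """Two-threshold (hysteresis) pinch detector modeled on pinchThresholds."""

    def __init__(self, pinch: float = 0.9, unpinch: float = 0.6):
        self.pinch, self.unpinch = pinch, unpinch
        self.pinched = False

    def update(self, strength: float) -> str:
        """Return "press", "release", or "" for one tracking frame."""
        if not self.pinched and strength >= self.pinch:
            self.pinched = True
            return "press"
        if self.pinched and strength <= self.unpinch:
            self.pinched = False
            return "release"
        return ""
```

Feeding strengths 0.5, 0.92, 0.8, 0.7, 0.55, 0.85 yields one press (at 0.92) and one release (at 0.55); the values between the thresholds change nothing.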

Device position can be specified with the center, facing, and cord options. Each of these options takes three floating-point values separated by commas only. The center option gives the center of tracking space in millimeters relative to the Leap device, with x along the device long axis increasing away from the cord and y along the sensor-facing direction (default 0,250,0). The facing option gives the direction the sensor is facing in screen coordinates (default 0,0,−1 if head-mounted, otherwise 0,1,0). The cord option gives the direction in which the cord leaves the device in screen coordinates (default 1,0,0 if head-mounted, toward the right, otherwise −1,0,0, toward the left).
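The facing and cord directions together fix the device orientation relative to the screen. The Python sketch below shows one way to turn them into a rotation and is an assumption-laden illustration, not the LeapMotion bundle's code: device_to_screen is hypothetical, device x is taken as the opposite of the cord direction (x increases away from the cord), device y as the facing direction, and device z is assumed to complete a right-handed frame.

```python
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def cross(a: Sequence[float], b: Sequence[float]) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def device_to_screen(v: Sequence[float], facing: Sequence[float],
                     cord: Sequence[float]) -> Vec:
    """Rotate a device-frame vector into screen coordinates.

    Assumes facing and cord are perpendicular unit vectors.
    """
    x_axis = tuple(-c for c in cord)    # x increases away from the cord
    y_axis = tuple(facing)              # y is the sensor-facing direction
    z_axis = cross(x_axis, y_axis)      # assumed right-handed completion
    return tuple(v[0] * x + v[1] * y + v[2] * z
                 for x, y, z in zip(x_axis, y_axis, z_axis))
```

With the desktop defaults (facing 0,1,0 and cord −1,0,0) this rotation is the identity, and with the head-mounted defaults the default center 0,250,0 maps to 250 mm into the screen.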

The debug option specifies whether to write LeapC library debugging messages (default false). The messages are sent to stderr in the Windows console from which ChimeraX was started, not to the Log.

•

lookingglass [ status ] [ viewAngle angle ] [ fieldOfView fov ] [ depthOffset offset ] [ verbose true | false ] [ quilt true | false ] [ saveQuiltImage png-file ] [ deviceNumber N ]

The command lookingglass (same as device lookingglass) enables showing the contents of the ChimeraX graphics window in a Looking Glass holographic display (details...). This device uses 45 images spanned by the horizontal viewAngle (default 40°). Each image is rendered with the specified fieldOfView (default 22°, as recommended by the device manufacturer).
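How 45 images can span the viewAngle is shown by this Python sketch of evenly spaced horizontal camera angles. view_angles is hypothetical, and it assumes even spacing with the first and last views at the edges of the span; the text above gives only the number of images and the total angle.

```python
from typing import List


def view_angles(view_angle: float = 40.0, num_views: int = 45) -> List[float]:
    """Horizontal camera angles (degrees) for the Looking Glass views.

    Assumes even spacing from -view_angle/2 to +view_angle/2.
    """
    step = view_angle / (num_views - 1)
    return [-view_angle / 2 + i * step for i in range(num_views)]
```

With the defaults, the views run from −20° to +20° in steps of 40/44 ≈ 0.91°, with the middle (23rd) view looking straight ahead.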

See also: camera

ChimeraX Looking Glass support works with Windows, Intel Mac, and Linux machines, but has only been tested on the Looking Glass 8.9" Gen1 display (details...), which is no longer sold.

The depthOffset (default 0.0 Å) is the position of the center of the bounding box of displayed models relative to the mid-depth plane of the Looking Glass display, where the focus is best. Positive values push the models further back in the display. Enabling a Looking Glass automatically assigns depth-offset adjustment to Shift-scroll with the mouse or trackpad. This lookingglass depth mode can be assigned to other buttons using the mousemode command.

The verbose option (default false) gives technical device parameters in the Log.

The quilt option (default false) shows the 45 images as tiled in a separate window and is used for debugging, whereas the saveQuiltImage option allows saving a PNG image of pixel size 4096 by 4096 with 9 rows and 5 columns. The png-file may be specified as a file pathname, enclosed in quotation marks if it includes spaces, or the word browse to specify it interactively in a file browser window.
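The quilt geometry follows from dividing the 4096-pixel square into 5 columns and 9 rows, giving 45 tiles of about 819 by 455 pixels. In the Python sketch below, quilt_tile is hypothetical, and the tile order (left to right within a row, rows counted from the bottom) and the handling of leftover pixels are assumptions, not details given above.

```python
from typing import Tuple


def quilt_tile(view: int, columns: int = 5, rows: int = 9,
               quilt_size: int = 4096) -> Tuple[int, int, int, int]:
    """Pixel rectangle (x, y, width, height) of one view in the quilt.

    Assumes views fill each row left to right, rows are counted from the
    bottom of the image, and leftover pixels are unused.
    """
    if not 0 <= view < columns * rows:
        raise ValueError("view index out of range")
    width, height = quilt_size // columns, quilt_size // rows
    col, row = view % columns, view // columns
    return col * width, row * height, width, height
```

Under these assumptions the first view occupies (0, 0, 819, 455) and the last (45th) view occupies (3276, 3640, 819, 455).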

The deviceNumber option (not tested) designates a specific Looking Glass device when more than one is connected.

UCSF Resource for Biocomputing, Visualization, and Informatics / April 2024
